To be most effective, fake news needs to spread through social media to reach receptive audiences. In this section, we explore how bots and flesh-and-blood people spread fake news, and how cookies are used to track people's visits to websites, build personality profiles, and show people the fake news content that they are most receptive to. We also look at trolls, that is, people who set up social media accounts for the sole purpose of spreading fake news and fanning the flames of misinformation.

Bots and Propagation of Fake News

What’s a Bot?

In addition to the billions of human beings using social media, there are also millions of robots, or bots, residing within. Bots help to propagate fake news and inflate the apparent popularity of fake news on social media.

Social media platforms (Facebook, Twitter, Instagram, etc.) have become home to millions of social bots that spread fake news. According to a 2017 estimate, there were 23 million bots on Twitter (around 8.5% of all accounts), 140 million bots on Facebook (up to 5.5% of accounts) and around 27 million bots on Instagram (8.2% of the accounts) [1]. That’s 190 million bots on social media – more than half the number of people who live in the entire USA!
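
The total above can be checked with a few lines of Python. The bot counts are the 2017 estimates quoted in the text. The US population figure (roughly 325 million in 2017) is an assumption added here for the comparison and does not come from the cited source.

```python
# Bot estimates quoted in the text (2017), in millions of accounts.
bots_millions = {"Twitter": 23, "Facebook": 140, "Instagram": 27}

# Assumption for illustration: the US population was roughly 325 million in 2017.
US_POPULATION_MILLIONS = 325

total_bots = sum(bots_millions.values())
share_of_us_population = total_bots / US_POPULATION_MILLIONS

print(f"Total bots: {total_bots} million")
print(f"Share of US population: {share_of_us_population:.0%}")
```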

Bots are not physical entities, like R2-D2 in Star Wars. They’re created by people with computer programming skills, and they reside on social media platforms. They consist of nothing but code, that is, lines of computer instructions. So, bots are computer algorithms (sets of logical steps to complete a specific task) that work in online social network sites to execute tasks autonomously and repetitively. They simulate the behavior of human beings in a social network, interacting with other users and sharing information and messages [1]–[3]. Because of the algorithms behind bots’ logic, bots can learn from reaction patterns how to respond to certain situations. That is, they possess artificial intelligence (AI).

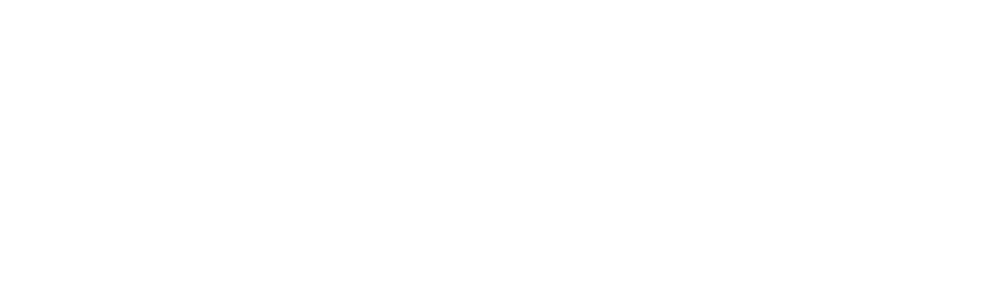

Source: Amit Agarwal's (2017) fairly accessible guide, "How to Write a Twitter Bot in 5 Minutes."

Source: eZanga

Artificial intelligence allows bots to simulate internet users’ behavior (like posting patterns), which helps in the propagation of fake news. For instance, on Twitter, bots can emulate social interactions that make them appear to be regular people. They look for influential Twitter users (those who have lots of followers) and send them questions in order to get noticed and to earn trust, both from those users and from other Twitter users who see the exchanges take place. They respond to postings or questions from others based on scripts they were programmed to use. They also generate debate by posting messages about trending topics (Twitter always shows users what's trending), hunting for information about the topic on other websites and repeating it [4].
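
To make the idea of "scripts" concrete, here is a minimal offline sketch of scripted behavior. It does not connect to any social media service, and every name in it (`SCRIPT`, `scripted_reply`, `pick_influential`, the sample accounts) is hypothetical. It only illustrates how a few simple rules can pick canned replies and rank accounts by follower count. Real bots described in the research are more elaborate.

```python
# Hypothetical script: each keyword maps to a canned reply (illustrative only).
SCRIPT = {
    "election": "Interesting point about the election. Where did you read that?",
    "weather": "The weather has been strange lately, hasn't it?",
}
DEFAULT_REPLY = "Tell me more!"


def scripted_reply(post: str) -> str:
    """Return the first canned reply whose keyword appears in the post."""
    text = post.lower()
    for keyword, reply in SCRIPT.items():
        if keyword in text:
            return reply
    return DEFAULT_REPLY


def pick_influential(followers: dict, threshold: int) -> list:
    """Return accounts with at least `threshold` followers, most followed first."""
    chosen = [name for name, count in followers.items() if count >= threshold]
    return sorted(chosen, key=lambda name: followers[name], reverse=True)


print(scripted_reply("Who will win the election?"))
print(pick_influential({"alice": 120, "bob": 50_000, "carol": 9_000}, threshold=5_000))
```

Nothing here "understands" the post. The reply is chosen by simple keyword matching, which is part of why scripted accounts can pass for people in short exchanges.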

How Do Bots Help in the Propagation of Fake News?

Bots spread fake news in two steps. First, they search for and retrieve non-curated information (information that has not been validated yet) on the web. Second, they post on social media sites continuously, spreading that non-curated content and using trending topics and hashtags as their main strategies to reach a broader audience, which in many cases further helps the propagation of the fake news. So bots spread fake news in two ways: They keep "saying" or tweeting fake news items, and they use the same pieces of false information to reply to or comment on the postings of real social media users.

Bots’ tactics work because average social media users tend to believe what they see or what’s shared by others (shares on Facebook, retweets on Twitter, trending hashtags, among others) without questioning it or, in this case, without looking carefully at the user profile of the source of the information. Bots take advantage of this by broadcasting high volumes of fake news and making it look credible [4], [5].

But bots apparently aren’t that good at deciding which original comments by other users to retweet. They’re not that smart.

People are smart. But people are emotional. Real people play a major role in the spread of fake news, too.

As information has come to light about bots’ role in the propagation of fake news, social media companies have tried to reduce the presence of bots and fake accounts on their platforms. For instance, in July 2018, Twitter began “removing tens of millions of suspicious accounts” [6]. Bots still exist, but most of the time, the spread of fake news is a consequence of real people, usually acting innocently. All of us, as users, are potentially the biggest part of the problem.

References

[1] C. A. de Lima Salge and N. Berente, “Is That Social Bot Behaving Unethically?,” Communications of the ACM, vol. 60, no. 9, pp. 29–31, Sep. 2017, https://doi.org/10.1145/3126492.

[2] Y. Boshmaf, I. Muslukhov, K. Beznosov, and M. Ripeanu, “Design and Analysis of a Social Botnet,” Computer Networks: The International Journal of Computer and Telecommunications Networking, vol. 57, no. 2, pp. 556–578, Feb. 2013, https://doi.org/10.1016/j.comnet.2012.06.006.

[3] F. Morstatter, L. Wu, T. H. Nazer, K. M. Carley, and H. Liu, “A New Approach to Bot Detection: Striking the Balance Between Precision and Recall,” in 2016 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Aug. 2016, pp. 533–540. https://doi.org/10.1109/ASONAM.2016.7752287.

[4] E. Ferrara, O. Varol, C. Davis, F. Menczer, and A. Flammini, “The Rise of Social Bots,” Communications of the ACM, vol. 59, no. 7, pp. 96–104, Jun. 2016, https://doi.org/10.1145/2818717.

[5] C. Watts, Extremist Content and Russian Disinformation Online: Working with Tech to Find Solutions. 2017. Accessed: Aug. 02, 2018. [Online]. Available: https://www.judiciary.senate.gov/imo/media/doc/10-31-17%20Watts%20Testimony.pdf

[6] N. Confessore and G. J. X. Dance, “Battling Fake Accounts, Twitter to Slash Millions of Followers,” The New York Times, Jul. 13, 2018. https://www.nytimes.com/2018/07/11/technology/twitter-fake-followers.html (accessed Aug. 02, 2018).

People Like You and the Propagation of Fake News

While bots spread a lot of fake news, most retransmissions of fake news come from real people [1].

One thing about social media that makes humans susceptible to fake news is the popularity indicators that social network sites provide, which people use to signal to other users that they approve of a message. Research shows that, psychologically, more Facebook likes on a posting, more “thumbs up” votes on a YouTube video, or more agreement on Yahoo! Answers that someone’s comment is useful changes other readers’ perceptions of the quality of a message, and changes their minds about the topic that the message itself is about [2], [3]. Research by Tandoc et al. suggests an additional social dynamic from popularity indicators: “When a post is accompanied by many likes, shares, or comments, it is more likely to receive attention by others, and therefore more likely to be further liked, shared, or commented on” [4]. These patterns hold whether the news is fake or not.
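
The feedback loop in that quotation can be illustrated with a toy simulation. The rule used here (each new like goes to a post with probability proportional to its current likes plus one) is an assumption chosen for illustration. It is not a model taken from Tandoc et al. or from the other studies cited.

```python
import random


def simulate_likes(initial_likes, new_likes, seed=0):
    """Hand out `new_likes` one at a time, favoring posts that already have more likes."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    likes = list(initial_likes)
    for _ in range(new_likes):
        # Weight each post by its current likes (+1 so a post with zero likes can still be picked).
        weights = [count + 1 for count in likes]
        chosen = rng.choices(range(len(likes)), weights=weights, k=1)[0]
        likes[chosen] += 1
    return likes


# Two otherwise identical posts; the second starts with a small head start.
print(simulate_likes([0, 10], new_likes=1000))
```

Under this assumed rule, an early head start tends to compound, whatever the content of the post.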

But research also shows that people are more likely to share fake news than real news, for several reasons. Ordinary people have a hard time identifying false news, and they don’t recognize their own inability to do so [5]. We tend to be overconfident in our ability to distinguish “real” from fake when it comes to online news. This overconfidence increases the chance of sharing fake news on social media platforms. Vosoughi et al. [1] found that real human Twitter users are 70% more likely to retweet fake news than truthful stories. People like novelty, and they like to share it with others. Fake news stories are always novel, since the events or statements they describe never really happened! (The information on "clickbait" that we presented earlier illustrates some of the ways that rumors and novelty get people's attention and are likely to be spread.)

Going further, Vosoughi et al.’s research highlighted the role emotions play when it comes to sharing news on social media. They found that reading true news mostly produces feelings of joyfulness, unhappiness, expectation, and trust, while reading fake news produces feelings of amazement, anxiety, shock, and repulsion. The researchers suggest that these emotions play an important role when people decide whether to share something on social media.

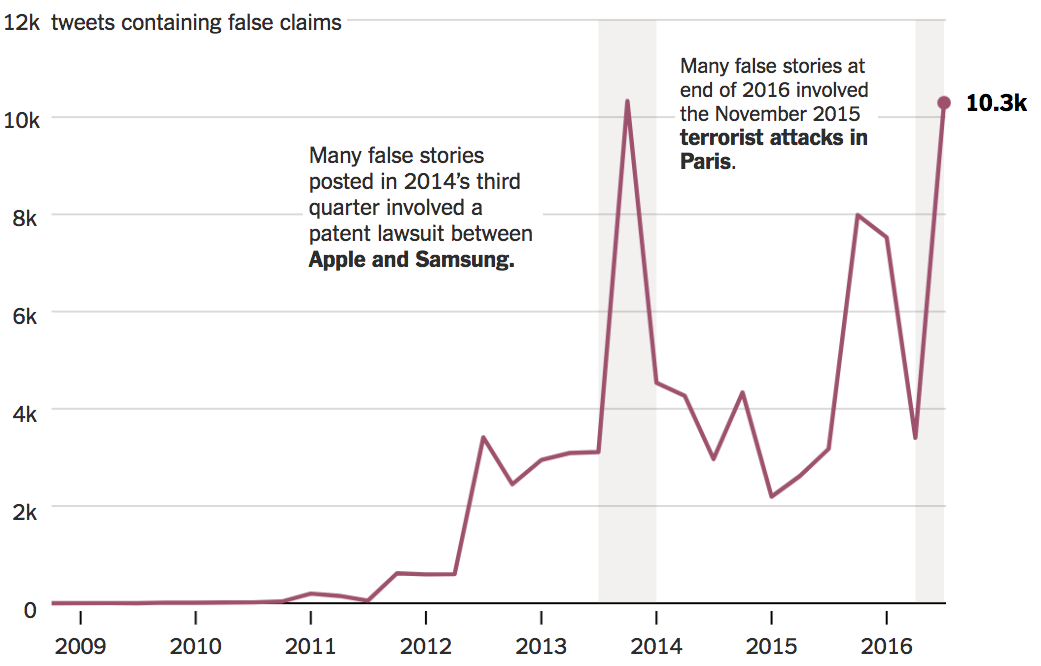

Not only are political topics shared by social media users. Fake news related to technology, urban legends, science, and business is also widely shared by people. Based on Vosoughi et al.’s research, the New York Times created a chart (below) showing the biggest fake news topic trends on Twitter between 2010 and 2016, illustrating that topics other than politics are also subject to deliberate misinformation [6].

Image source: New York Times

References

[1] S. Vosoughi, D. Roy, and S. Aral, “The Spread of True and False News Online,” Science, vol. 359, no. 6380, pp. 1146–1151, Mar. 2018, https://doi.org/10.1126/science.aap9559.

[2] J. B. Walther and J. Jang, “Communication Processes in Participatory Websites,” Journal of Computer-Mediated Communication, vol. 18, no. 1, pp. 2–15, Oct. 2012, https://doi.org/10.1111/j.1083-6101.2012.01592.x.

[3] J. B. Walther, J. Jang, and A. A. H. Edwards, “Evaluating Health Advice in a Web 2.0 Environment: The Impact of Multiple User-Generated Factors on HIV Advice Perceptions,” Health Communication, vol. 33, no. 1, pp. 57–67, Jan. 2018, https://doi.org/10.1080/10410236.2016.1242036.

[4] E. C. Tandoc, Z. W. Lim, and R. Ling, “Defining ‘Fake News’: A Typology of Scholarly Definitions,” Digital Journalism, vol. 6, no. 2, pp. 137–153, Feb. 2018, https://doi.org/10.1080/21670811.2017.1360143.

[5] B. A. Lyons, J. M. Montgomery, A. M. Guess, B. Nyhan, and J. Reifler, “Overconfidence in News Judgments is Associated with False News Susceptibility,” Proceedings of the National Academy of Sciences, vol. 118, no. 23, p. e2019527118, Jun. 2021, https://doi.org/10.1073/pnas.2019527118.

[6] S. Lohr, “It’s True: False News Spreads Faster and Wider. And Humans Are to Blame,” The New York Times, Mar. 08, 2018. https://www.nytimes.com/2018/03/08/technology/twitter-fake-news-research.html (accessed Aug. 02, 2018).

Microtargeting and the Propagation of Fake News

How is Fake News Directed to Specific Types of People?

How do fake news websites target their audience and make sure that they send misinformation to the people who are most likely to be receptive to it? One way is to use social media analytics [1]. To understand how analytics work, we first need to explain how cookies work, and then show how interest groups can use the information that cookies provide to find a receptive audience for their messages.

Source: OSTraining.com

You’ve probably heard of cookies: files that websites store on your computer to save your preferences and remember what you look at, shop for, etc. Once you agree to let a website that you visit store a cookie in your app or web browser, the cookie records certain selections you make (e.g., what language you prefer) so that the website can use the same settings when you visit it again. The website will know it is you because the cookie saved in your browser has a unique ID.
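
As a rough sketch of the mechanism just described, the snippet below uses Python's standard `http.cookies` module to build the `Set-Cookie` headers a site might send on a first visit, and to parse the `Cookie` header a browser sends back later. The cookie names `visitor_id` and `language`, the one-year lifetime, and the helper function names are all assumptions made for illustration. Real sites choose their own.

```python
import uuid
from http.cookies import SimpleCookie


def build_set_cookie_headers(language: str) -> list:
    """Build the Set-Cookie header values a site might send on a first visit."""
    cookie = SimpleCookie()
    cookie["visitor_id"] = uuid.uuid4().hex  # unique ID for this browser (hypothetical name)
    cookie["language"] = language            # remembered preference (hypothetical name)
    for morsel in cookie.values():
        morsel["path"] = "/"
        morsel["max-age"] = 60 * 60 * 24 * 365  # keep for about one year
    return [morsel.OutputString() for morsel in cookie.values()]


def read_cookie_header(header: str) -> dict:
    """Parse the Cookie header that the browser sends back on a later visit."""
    cookie = SimpleCookie()
    cookie.load(header)
    return {name: morsel.value for name, morsel in cookie.items()}


for header in build_set_cookie_headers("en"):
    print("Set-Cookie:", header)

print(read_cookie_header("visitor_id=abc123; language=en"))
```

The browser stores these values and sends them back with each later request to the same site, which is how the site recognizes a returning visitor.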

Yet websites don't only save cookies on your computer for your convenience. Cookies can also be used to track your actions across the websites you visit. Some of the cookies you store even come from websites other than the ones you’ve visited. Each website that’s configured to save these so-called “third-party” cookies on your computer reports to the company behind the cookie that your browser, identified by the cookie's unique ID, has visited it. These cookies are called tracking cookies, or trackers for short. You might ask, who would be interested in tracking your every step on the web?
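
The following toy simulation shows why a single cookie ID is enough to link visits across unrelated sites. The `Tracker` class, the ID `browser-42`, and the `.example` site names are hypothetical. In practice the "report" is an HTTP request from your browser to the tracking company, which this sketch replaces with a plain method call.

```python
from collections import defaultdict


class Tracker:
    """Toy third-party tracker: logs which sites each cookie ID was seen on."""

    def __init__(self):
        self.visits = defaultdict(list)  # cookie ID -> list of sites, in visit order

    def report(self, cookie_id: str, site: str) -> None:
        """Called whenever a page that embeds the tracker is loaded."""
        self.visits[cookie_id].append(site)

    def history(self, cookie_id: str) -> list:
        """Return a copy of the sites recorded for this cookie ID."""
        return list(self.visits.get(cookie_id, []))


tracker = Tracker()
# The same browser (same cookie ID) loads pages on three unrelated sites.
for site in ["shoes.example", "news.example", "recipes.example"]:
    tracker.report("browser-42", site)

print(tracker.history("browser-42"))
```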

Many of the trackers come from companies that collect and analyze your web usage in order to personalize ads. Take Facebook, for example. Many websites other than Facebook include Facebook’s like button on them.

Every time you visit a website on which the Facebook button merely appears, that website (or more precisely, the programming code that’s associated with the button’s appearance on your screen) reports your visit to Facebook. This happens whether or not you press the like button, whether or not you are logged into Facebook, and even whether or not you are a Facebook user. The advantage for the owners of the website is that when users like their website or post, they can reach a wider audience. Moreover, they can also look at Facebook’s reports to see who visited their website [2].

Facebook, Google, and other companies that provide trackers analyze the websites you’ve visited and also what you did while you looked at those websites (what you clicked on, how much time you spent there, what other pages you opened, etc.) by running scripts (little programs) in your browser that you’re not even aware of [3]. The websites you visit, in combination with the actions that you take on those websites, give valuable information about you to these companies and to other social media analytics firms. Based on these data, they build models to predict your interests and your purchase patterns, and to select and deliver the kinds of advertisements you are most likely to react positively to. And Facebook can sell advertising to companies to promote products like the ones you looked at on other websites. Ever looked at, say, shoes on a shoe-selling website, then later seen shoe ads the next time you used Google or Facebook? That’s how the cookie crumbles.
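
A very simplified sketch of such a model: score each product category by the share of time a browser spent on it, then pick the ad for the top category. The event data, the ad inventory, and the scoring rule are all made up for illustration. Commercial systems use far richer data and models.

```python
from collections import Counter

# Hypothetical tracked events: (page category, seconds spent on the page).
events = [("shoes", 120), ("news", 30), ("shoes", 200), ("laptops", 45)]

# Hypothetical ad inventory, keyed by the same categories.
ads = {
    "shoes": "Running shoe sale",
    "laptops": "Laptop deals",
    "news": "Newspaper subscription",
}


def interest_scores(events):
    """Score each category by total time spent, normalized so the scores sum to 1."""
    seconds = Counter()
    for category, duration in events:
        seconds[category] += duration
    total = sum(seconds.values())
    return {category: spent / total for category, spent in seconds.items()}


def pick_ad(events, ads):
    """Return the ad for the category with the highest interest score."""
    scores = interest_scores(events)
    best = max(scores, key=scores.get)
    return ads[best]


print(pick_ad(events, ads))  # prints "Running shoe sale"
```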

While some people don't mind that their data are used to show them ads for products that they like and to make these ads look more appealing to them, others object to the practice. We should mention three concerns here. The first one is about the data collection itself. Most people aren't entirely sure what data are collected about them, and they don’t like it, in principle. A second concern relates to privacy. Many privacy advocates raise the point that it’s unclear to consumers who the data are shared with and how much control people have over their data once a company’s collected it. Hackers have stolen and sometimes even published personal data, which understandably raises concerns about the safety of one’s data after it’s been collected [4].

Not Everyone is in it for Promoting Harmless Products

A third concern has to do with what the data are used to do. Some media analytics firms don't want to sell you a product like shoes, a new laptop, or a phone. There are some firms that specialize in analyzing your data to convince you to vote for a political candidate. While this is similar in some ways to old-fashioned advertising campaign strategies, social media analytics allow companies to target you with greater precision. They know how to “push your buttons” and try to persuade you to vote for one candidate or another based on issues, and candidates’ positions on those issues, that they have reason to believe concern or appeal to you and others like you. They can make you see ads--or fake news stories--when you use the web or social media.

If you respond to these stories or ads (by liking them or commenting on them, for instance), the data about your preferences become even more refined and precise. It’s important to note that the media platforms don’t actually decide what to show you. But they know your profile of preferences and associations, and they sell advertisers access to people like you, who share your preferences and associations. The advertisers’ messages are then shown to you automatically, with the social media platform acting as a go-between [5].

Facebook’s advertising algorithms determine who is shown these paid ads, some of which might be fake news, intended to influence people’s political or other beliefs. “Microtargeting” is the term for using data to show certain messages specifically to those people who are likely to read, like, and share such a post, and click on the (fake news or other) website where it originated.
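
In code terms, microtargeting amounts to filtering an audience by predicted responsiveness. The sketch below assumes each user already has a predicted engagement score per topic. The users, topics, scores, and the 0.6 threshold are invented for illustration and say nothing about how any real platform computes or applies such scores.

```python
# Hypothetical user profiles: predicted probability of engaging with each topic.
profiles = {
    "user_a": {"healthcare": 0.82, "taxes": 0.10},
    "user_b": {"healthcare": 0.15, "taxes": 0.77},
    "user_c": {"healthcare": 0.64, "taxes": 0.58},
}


def microtarget(profiles, topic, threshold=0.6):
    """Return the users whose predicted engagement with `topic` meets the threshold."""
    return sorted(
        user
        for user, scores in profiles.items()
        if scores.get(topic, 0.0) >= threshold
    )


print(microtarget(profiles, "healthcare"))  # prints ['user_a', 'user_c']
print(microtarget(profiles, "taxes"))       # prints ['user_b']
```

Each message is shown only to the group predicted to respond to it, so different users can see entirely different messages from the same advertiser.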

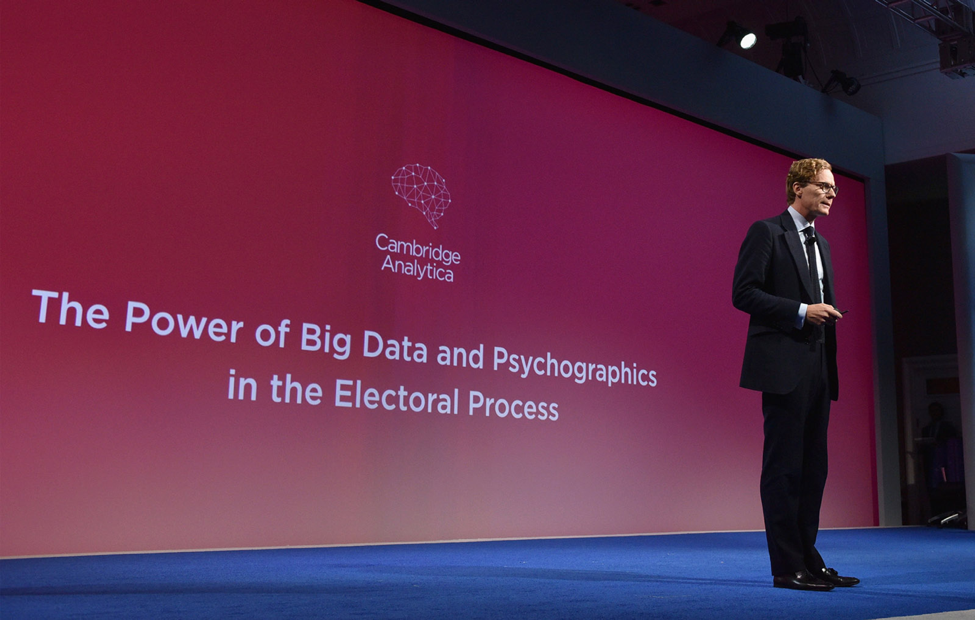

Cambridge Analytica

The CEO of Cambridge Analytica, Alexander Nix, describing what the company could do, in September 2016 (Image source: New York Review of Books)

Cambridge Analytica is a firm that rose to prominence because of its involvement in Brexit and the 2016 US presidential election. One of its most notorious members, Michal Kosinski, was a PhD candidate at Cambridge University in the UK when he developed the “MyPersonality” Facebook app in 2013. The app encouraged users to take quizzes that would generate for them, and for Cambridge Analytica, a personality profile. It used the so-called OCEAN personality profile, a common tool among psychologists to measure a person’s personality on five traits: Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. Unbeknownst to those who voluntarily took the quizzes, the app gathered much more information about them than just the quiz responses. It gathered data about things they liked on Facebook, what topical discussion groups they subscribed to on Facebook, and, without telling the users, similar information about all the users’ friends, who didn’t even know about the quiz or that their data were being harvested [6]. Kosinski was able to connect people’s personality types, as revealed by the quiz they took, to what they liked on Facebook, and so to build refined models of what kinds of people political advertisers could reach based on their Facebook likes.

American investor Robert Mercer, and the person who would become Donald Trump’s campaign manager, Steve Bannon, incorporated Cambridge Analytica in 2015. They used Kosinski’s method to analyze a variety of people’s psychological and political vulnerabilities from their Facebook usage. They figured out who was likely to respond best to what kind of campaign advertising. Information from Cambridge Analytica, built on this knowledge of people’s hopes, fears, and insecurities, was used to sway many people’s opinions during the UK’s 2016 vote on Brexit and during the 2016 US presidential election. Many people have raised the concern that this microtargeting affected both those elections, and that a lot of the microtargeted messages were fake news. All of this is to show how social media analytics can be combined with fake news websites to microtarget groups for political and other kinds of advantage [5].

References

[1] “What are Social Media Analytics (SMA)?” Techopedia. https://www.techopedia.com/definition/13853/social-media-analytics-sma (accessed Aug. 02, 2018).

[2] D. Nield, “You Probably Don’t Know All the Ways Facebook Tracks You,” Gizmodo, Jun. 08, 2017. https://fieldguide.gizmodo.com/all-the-ways-facebook-tracks-you-that-you-might-not-kno-1795604150 (accessed Aug. 02, 2018).

[3] N. Tiku, “You’re Browsing a Website. These Companies May Be Recording Your Every Move,” Wired, Nov. 16, 2017. https://www.wired.com/story/the-dark-side-of-replay-sessions-that-record-your-every-move-online/ (accessed Aug. 02, 2018).

[4] M. Curtin, “Was Your Facebook Data Stolen by Cambridge Analytica? Here’s How to Tell,” Inc.com, Apr. 11, 2018. https://www.inc.com/melanie-curtin/was-your-facebook-data-stolen-by-cambridge-analytica-heres-how-to-tell.html (accessed Aug. 02, 2018).

[5] J. Albright, “#Election2016: Propaganda-lytics & Weaponized Shadow Trackers,” Medium, Nov. 22, 2016. https://medium.com/@d1gi/election2016-propaganda-lytics-weaponized-shadow-trackers-a6c9281f5ef9 (accessed Aug. 02, 2018).

[6] E. Sutton, “Trump Knows You Better Than You Know Yourself,” aNtiDoTe Zine, Jan. 22, 2017. https://antidotezine.com/2017/01/22/trump-knows-you/ (accessed Aug. 02, 2018).

Trolls and the Propagation of Fake News

Trolls, in this context, are humans who hold accounts on social media platforms more or less for one purpose: to generate comments that argue with people, insult and name-call other users and public figures, try to undermine the credibility of ideas they don’t like, and intimidate individuals who post those ideas. They also support and advocate for fake news stories that they’re ideologically aligned with. They’re often pretty nasty in their comments, and that gets other, normal users to be nasty, too.

The Russian Internet Research Agency (the one that produced a lot of fake news to try to affect the 2016 US election) has been supporting trolls for years. Long before the campaign season, its agents created social media accounts and made their profiles look like those of typical Americans: They chose American names, put photos and descriptions of themselves (and their fake families) online, and they liked things and joined discussion groups. They issued innocuous messages for a time. They made friends and followers. They more or less infiltrated American social media space and lay low until election season. Then they rolled out the nastiness, supported fake news, and fostered disbelief in real news stories. This is all very well documented in Congressional investigations and in the indictments that resulted from Robert Mueller’s investigation into possible 2016 election improprieties by Russia [1].

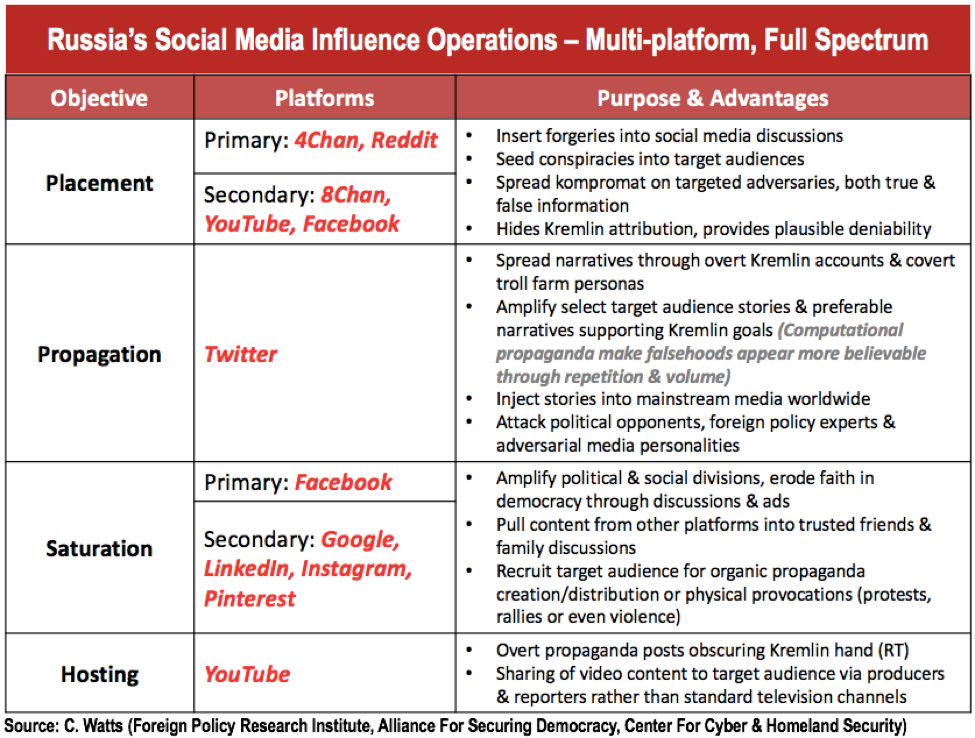

Here's a summary of Russia's Social Media Influence Operations, from a presentation to a U.S. Senate subcommittee by Clint Watts of the Foreign Policy Research Institute:

Not all trolls are Russian plants. We have our own home-grown variety, too [2]. We know less about them since what they do isn’t illegal, and they haven’t been formally investigated. To learn more about Russian trolls that still exist, and what you can do to avoid their influence, we recommend this 3-minute video from ACT.TV:

References

[1] United States of America v. Internet Research Agency et al. 2018. [Online]. Available: https://www.justice.gov/file/1035477/download

[2] L. Bénichou, “The Web’s Most Toxic Trolls Live in … Vermont?,” Wired, Aug. 22, 2017. Accessed: Aug. 07, 2018. [Online]. Available: https://www.wired.com/2017/08/internet-troll-map/